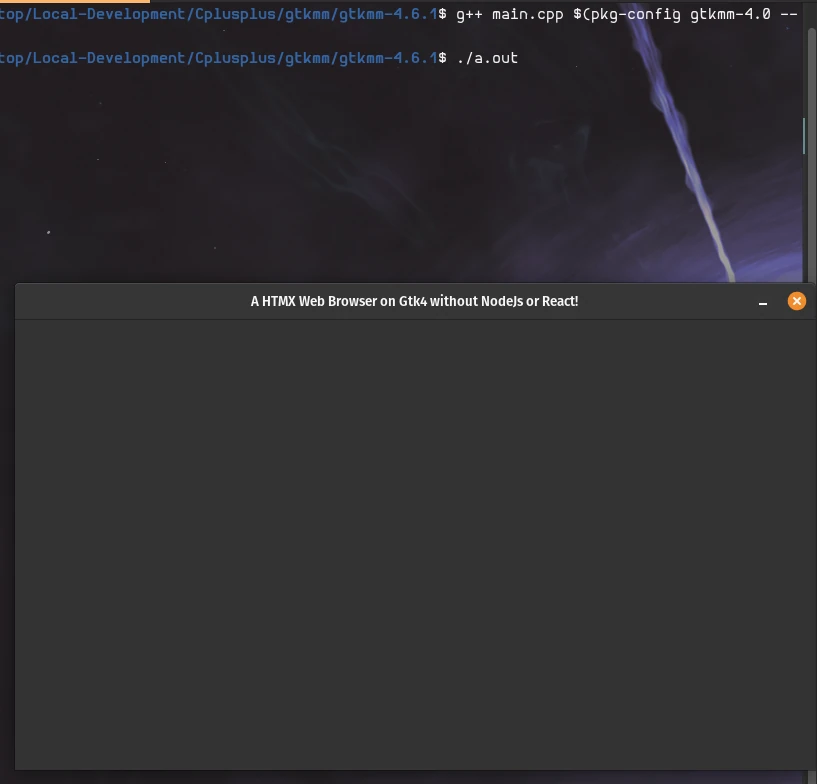

Archive, Compress & Decompress Huge Files with tar and zstd, Tracking Progress with pv

Back up and organize huge amounts of data onto FAT32 drives at extreme speed!

The following tutorial shows how we back up our drives efficiently with zstd and tar on Linux, or in PowerShell on Windows if the same tools are installed there.

We archived, compressed, decompressed and extracted 120 GB of data within 20 minutes, an average of roughly 100 MB/s!

Package Requirements:

- tar

- pv

- zstd

- dosfsck (from the dosfstools package)

- split and cat (from GNU coreutils)

Option 1: A simpler way to compress an existing tar archive:

`pv Desktop.tar | zstd > Desktop.tar.zst`
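Before deleting the original tar, it is worth confirming that the compressed copy is intact. The sketch below is a self-contained round trip under stated assumptions: it builds a small stand-in `Desktop.tar` in a temporary directory (the sample files are hypothetical), compresses it with zstd using `>` rather than `>>` (which would append a second zstd frame to any existing file), tests it with `zstd -t`, and compares the decompressed bytes with the original. pv is left out so the script runs unattended; only GNU tar, zstd and cmp are assumed to be installed.

```shell
#!/usr/bin/env bash
# Sketch: compress an existing tar with zstd and verify the result.
# Assumes GNU tar, zstd and cmp are installed; the sample files are hypothetical.
set -euo pipefail

workdir=$(mktemp -d)

# Build a small stand-in for Desktop.tar
mkdir -p "$workdir/Desktop"
printf 'hello\n' > "$workdir/Desktop/notes.txt"
seq 1 10000 > "$workdir/Desktop/numbers.txt"
tar -C "$workdir" -cf "$workdir/Desktop.tar" Desktop

# '>' overwrites on rerun; '>>' would append a second zstd frame to an old file
zstd -q -c "$workdir/Desktop.tar" > "$workdir/Desktop.tar.zst"

# -t decompresses in memory and fails on corruption without writing any output
zstd -q -t "$workdir/Desktop.tar.zst"

# Byte-for-byte comparison of the decompressed stream with the original tar
zstd -q -d -c "$workdir/Desktop.tar.zst" | cmp - "$workdir/Desktop.tar"
echo "round trip OK"
```

The same two checks (`zstd -t` and the `cmp` comparison) can be pointed at a real `Desktop.tar` and its compressed copy.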

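Check the largest file size the FAT32 drive accepts, since that sets the chunk size for splitting (`-n` checks the filesystem without writing any repairs):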

`sudo dosfsck -v -n /dev/sda1`

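**File size is 4294967295 bytes, cluster chain length is 0 bytes.**

FAT32 caps a single file at 4,294,967,295 bytes (4 GiB minus one byte), so that is the byte count passed to split below.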
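The exact byte count matters because split's size suffixes are binary: `4G` means 4 × 1024³ = 4,294,967,296 bytes, one byte more than FAT32 allows. The sketch below does that arithmetic in bash and estimates how many chunks a backup would produce; the 120 GB stream size is a hypothetical input, and the real compressed size depends on how compressible the data is.

```shell
#!/usr/bin/env bash
# Sketch: pick a FAT32-safe chunk size and estimate the number of split chunks.
# The 120 GB stream size is a hypothetical example value.
set -euo pipefail

fat32_max=4294967295                 # FAT32 per-file limit: 4 GiB - 1 byte
split_4g=$((4 * 1024 ** 3))          # what 'split --bytes=4G' would use
echo "4G in split units: $split_4g bytes (over the limit by $((split_4g - fat32_max)))"

stream_bytes=$((120 * 1000 ** 3))    # hypothetical 120 GB compressed stream
# Ceiling division: any leftover bytes need one more chunk
chunks=$(( (stream_bytes + fat32_max - 1) / fat32_max ))
echo "chunks needed: $chunks"
```

It prints `4G in split units: 4294967296 bytes (over the limit by 1)` and `chunks needed: 28`.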

Option 2: Create the tar, compress it with zstd through tar's `-I` option, and split the stream into chunks that fit on a FAT32-formatted drive, while pv shows a progress counter. The chunks can then be moved between drives.

`tar -I zstd -cf - /home | (pv -p --timer --rate --bytes | split --bytes=4294967295 - /run/media/Backups/System/Linux/2024/Home.backup.tar.zst)`
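To see what this pipeline produces without waiting on a real backup, the sketch below runs the same `tar -I zstd | pv | split` chain on a small directory of random data with a deliberately tiny chunk size (100 KiB). The directory names and sizes are hypothetical, and `show_progress` is a hypothetical helper that uses pv when it is installed and falls back to `cat` otherwise. split appends suffixes (`aa`, `ab`, ...) to the prefix, so the chunks are named `Home.backup.tar.zstaa`, `Home.backup.tar.zstab`, and so on.

```shell
#!/usr/bin/env bash
# Sketch of the option 2 pipeline on a tiny, hypothetical data set.
# Assumes GNU tar (for -I), zstd and coreutils split; pv is optional.
set -euo pipefail

workdir=$(mktemp -d)
mkdir -p "$workdir/src/home" "$workdir/backup"
# 1 MiB of random data: incompressible, so the stream stays around 1 MiB
head -c 1048576 /dev/urandom > "$workdir/src/home/random.bin"

# Hypothetical helper: show pv's progress when available, else pass data through
show_progress() {
  if command -v pv >/dev/null 2>&1; then
    pv -p --timer --rate --bytes
  else
    cat
  fi
}

# Same shape as the real command, with 100 KiB chunks instead of 4294967295 bytes
tar -C "$workdir/src" -I zstd -cf - home \
  | show_progress \
  | split --bytes=100K - "$workdir/backup/Home.backup.tar.zst"

ls "$workdir/backup"
```

Replacing `100K` with `4294967295` and the sample directory with `/home` gives back the tutorial's command.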

Option 3: Back up several files and folders from the home directory (run this from inside your home directory, since the paths are relative):

`tar -I zstd -cf - Documents/ Downloads/ Music/ Pictures/ Public/ Templates/ Videos/ .ssh/ .bashrc .gitconfig .nvidia-settings-rc | (pv -p --timer --rate --bytes | split --bytes=4294967295 - /run/media/Backups/System/Linux/2024/Home.backup.tar.zst)`
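A quick way to confirm what a multi-item backup captured is to list the archive straight from the chunks, without writing a reassembled tar to disk. The sketch below assumes GNU tar, zstd and coreutils; the fake home directory and its contents are hypothetical stand-ins for a real home, and pv is omitted so it runs unattended.

```shell
#!/usr/bin/env bash
# Sketch: back up selected items with relative paths, then list the archive
# straight from the chunks to confirm what was captured.
# The fake home directory and its contents are hypothetical stand-ins.
set -euo pipefail

fakehome=$(mktemp -d)
dest=$(mktemp -d)
mkdir -p "$fakehome/Documents" "$fakehome/.ssh"
printf 'report\n' > "$fakehome/Documents/report.txt"
printf 'alias ll="ls -l"\n' > "$fakehome/.bashrc"
printf 'placeholder\n' > "$fakehome/.ssh/config"

# Relative paths only resolve correctly from inside the home directory
cd "$fakehome"
tar -I zstd -cf - Documents/ .ssh/ .bashrc \
  | split --bytes=4294967295 - "$dest/Home.backup.tar.zst"

# Reassemble in glob order (aa, ab, ...), decompress, and list member names
listing=$(cat "$dest"/Home.backup.tar.zst* | zstd -q -d | tar -tf -)
printf '%s\n' "$listing"
```

Because the member names are relative, extracting this archive later recreates `Documents/`, `.ssh/` and `.bashrc` under whatever directory tar extracts into.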

Reassemble and decompress the split `*.zst*` chunks into a single tar (make sure the glob matches only this backup's chunks):

`cat *.zst* | zstd -d > /home/odinzu/dest.tar`
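If there is not enough free space for both `dest.tar` and its extracted contents, the chunks can also be streamed straight into tar. The sketch below is a self-contained round trip under stated assumptions (GNU tar, zstd, coreutils, diff; sample data, chunk size and directory names are hypothetical): it creates a split backup, restores it with `cat | zstd -d | tar -xf -`, and compares the restored tree with the original.

```shell
#!/usr/bin/env bash
# Sketch: restore split chunks without writing an intermediate dest.tar.
# Sample data, chunk size and directory names are hypothetical.
set -euo pipefail

workdir=$(mktemp -d)
mkdir -p "$workdir/src/Documents" "$workdir/chunks" "$workdir/restore"
seq 1 50000 > "$workdir/src/Documents/numbers.txt"
head -c 200000 /dev/urandom > "$workdir/src/Documents/blob.bin"

# Create the split backup (small chunks so there is more than one)
tar -C "$workdir/src" -I zstd -cf - Documents \
  | split --bytes=64K - "$workdir/chunks/Home.backup.tar.zst"

# cat joins the chunks in glob order; zstd -d decompresses the stream;
# tar reads the archive from stdin ('-f -') and extracts under restore/
cat "$workdir"/chunks/Home.backup.tar.zst* | zstd -q -d | tar -xf - -C "$workdir/restore"

# Recursive comparison of original and restored trees (exits non-zero on a mismatch)
diff -r "$workdir/src" "$workdir/restore"
echo "restore matches original"
```

Inserting pv after `cat` in the restore pipeline brings back the progress display.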

Extract the tar into the current directory:

`tar -xvf dest.tar`

Or extract the archive into a specific directory, which must already exist:

`tar -xvf dest.tar -C /opt/files`
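Since `tar -C` fails when the target directory is missing, creating it first with `mkdir -p` avoids that error. The sketch below (all paths and sample files are hypothetical) builds a small `dest.tar`, extracts it into a freshly created directory, and also shows pulling out a single member by the name stored in the archive.

```shell
#!/usr/bin/env bash
# Sketch: extract dest.tar into a chosen directory, creating it first,
# and optionally pull out a single member. All paths are hypothetical.
set -euo pipefail

workdir=$(mktemp -d)
mkdir -p "$workdir/src/Documents" "$workdir/src/Music"
printf 'report\n' > "$workdir/src/Documents/report.txt"
printf 'track\n' > "$workdir/src/Music/track.txt"
tar -C "$workdir/src" -cf "$workdir/dest.tar" Documents Music

# tar -C fails if the target directory does not exist, so create it first
target="$workdir/opt/files"
mkdir -p "$target"
tar -xvf "$workdir/dest.tar" -C "$target"

# Extract one member only, by its name as stored in the archive
single="$workdir/single"
mkdir -p "$single"
tar -xf "$workdir/dest.tar" -C "$single" Documents/report.txt
```

Running `tar -tf dest.tar` first shows the exact member names to pass for a single-file extraction.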

Resources

- GitHub zstd issue #4014: https://github.com/facebook/zstd/issues/4014#issuecomment-2032786590